An LLM serving engine extension that reduces TTFT (time to first token) and increases throughput, especially in long-context scenarios.

Blog | Documentation | Join Slack | Interest Form | Roadmap

🔥 NEW: For enterprise-scale deployment of LMCache and vLLM, check out the vLLM Production Stack. LMCache is also officially supported in llm-d and KServe!

Summary

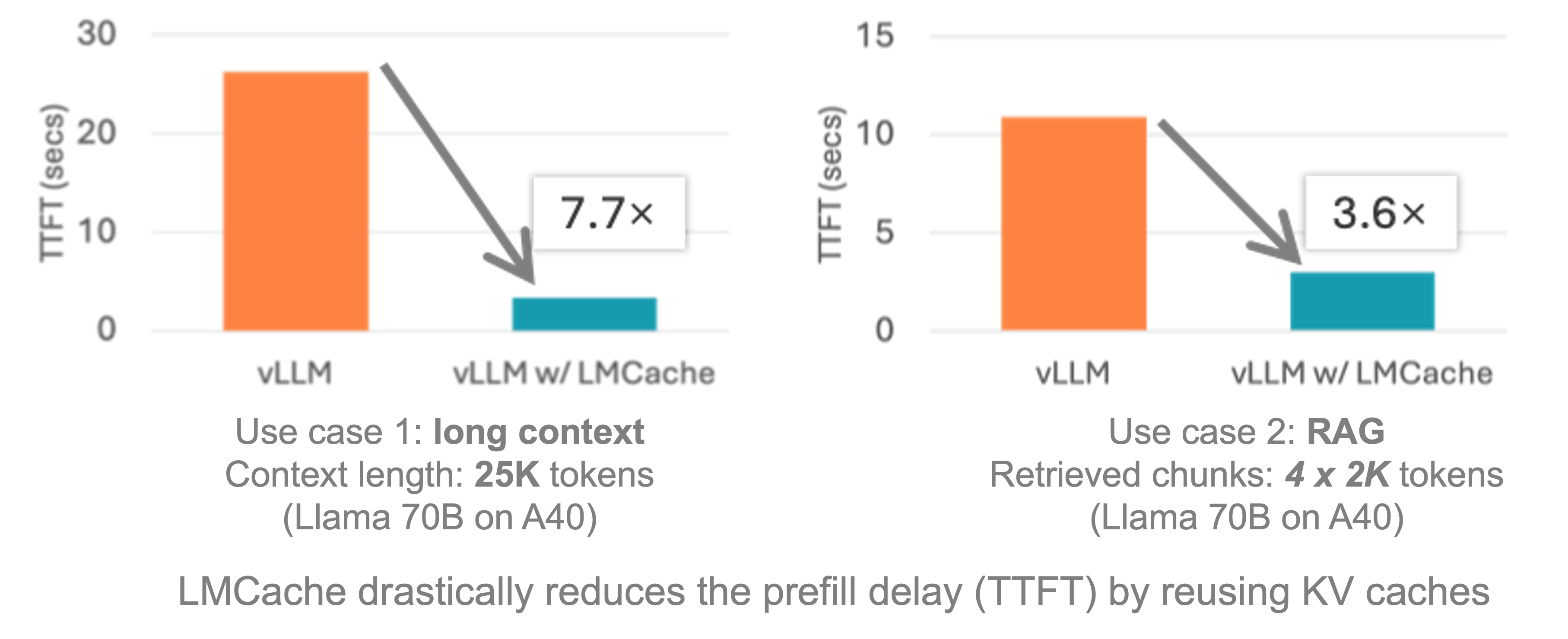

LMCache is an LLM serving engine extension that reduces TTFT and increases throughput, especially in long-context scenarios. It stores the KV caches of reusable text across several locations (GPU, CPU DRAM, and local disk) and reuses the KV cache of any repeated text, not necessarily a prefix, in any serving engine instance. This saves precious GPU cycles and reduces user response delay.
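To make the idea of tiered, content-addressed KV reuse concrete, here is a toy sketch. It is not LMCache's API or implementation: `TieredKVStore`, `chunk_key`, `lookup`, `CHUNK_TOKENS`, and the tier capacities are hypothetical names and values invented for illustration. It keys each fixed-size chunk of token IDs by a content hash, so a chunk can be found again even when it is not at the start of a new request, and it demotes least-recently-used chunks from faster tiers to slower ones. Real non-prefix reuse also has to handle positional information and cross-chunk attention, which this sketch ignores.

```python
import hashlib
from collections import OrderedDict

CHUNK_TOKENS = 4  # hypothetical chunk size, kept small for readability


def chunk_key(tokens):
    """Hash a chunk of token IDs so identical content maps to the same key."""
    raw = ",".join(str(t) for t in tokens).encode("utf-8")
    return hashlib.sha256(raw).hexdigest()


def split_chunks(tokens):
    """Split a token list into consecutive chunks of CHUNK_TOKENS tokens."""
    return [tokens[i:i + CHUNK_TOKENS] for i in range(0, len(tokens), CHUNK_TOKENS)]


class TieredKVStore:
    """Toy store: ordered tiers (fastest first), each an LRU dict with a capacity."""

    def __init__(self, capacities):
        # capacities maps tier name -> max chunks, e.g. {"gpu": 1, "cpu": 2, "disk": 8}
        self.capacities = dict(capacities)
        self.tiers = {name: OrderedDict() for name in capacities}

    def put(self, key, value, tier_index=0):
        names = list(self.tiers)
        if tier_index >= len(names):
            return  # evicted from the slowest tier: the entry is dropped
        name = names[tier_index]
        tier = self.tiers[name]
        tier[key] = value
        tier.move_to_end(key)  # mark as most recently used
        if len(tier) > self.capacities[name]:
            old_key, old_value = tier.popitem(last=False)  # evict the LRU entry
            self.put(old_key, old_value, tier_index + 1)   # demote it one tier

    def get(self, key):
        """Return (tier_name, value) from the fastest tier holding key, else None."""
        for name, tier in self.tiers.items():
            if key in tier:
                tier.move_to_end(key)
                return name, tier[key]
        return None


def lookup(store, tokens):
    """For each chunk of `tokens`, report the tier that holds it (or None)."""
    hits = []
    for chunk in split_chunks(tokens):
        found = store.get(chunk_key(chunk))
        hits.append(found[0] if found else None)
    return hits


store = TieredKVStore({"gpu": 1, "cpu": 2, "disk": 8})
document = list(range(12))  # three chunks of four tokens
for chunk in split_chunks(document):
    store.put(chunk_key(chunk), f"kv:{chunk}")  # stand-in for real KV tensors

# A new request whose second chunk repeats the middle of `document`.
request = [100, 101, 102, 103] + document[4:8]
print(lookup(store, request))  # prints [None, 'cpu']
```

The first chunk of the request is new and misses, while the second chunk is found in the CPU tier even though it is not a prefix of the request.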

By combining LMCache with vLLM, developers achieve 3-10x delay savings and GPU cycle reduction in many LLM use cases, including multi-round QA and RAG.

Features

- 🔥 Integration with vLLM v1 with the following features:
  - High performance CPU KVCache offloading
  - Disaggregated prefill
  - P2P KVCache sharing
- LMCache is supported in the vLLM production stack, llm-d, and KServe
- Stable support for non-prefix KV caches
- Storage support as follows:
  - CPU
  - Disk
  - NIXL
- Installation support through pip and latest vLLM

Installation

To use LMCache, simply install lmcache from your package manager, e.g. pip:

pip install lmcache

LMCache works on Linux platforms with NVIDIA GPUs.

More detailed installation instructions are available in the docs.
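After installing, a quick check can confirm that the package is visible to the current interpreter. The sketch below uses only the Python standard library; `installed_version` and `environment_report` are hypothetical helper names, not part of LMCache. It reports the installed version (if any) and whether the operating system is Linux. It does not detect NVIDIA GPUs.

```python
import platform
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def environment_report(package="lmcache"):
    """Summarize whether the package is installed and whether the OS is Linux."""
    return {
        "package": package,
        "version": installed_version(package),
        "is_linux": platform.system() == "Linux",
    }


if __name__ == "__main__":
    print(environment_report())
```

A `version` of `None` means the package was not found in the active environment, which usually points to installing into a different interpreter or virtual environment.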

Getting started

The best way to get started is to check out the Quickstart Examples in the docs.

Documentation

Check out the LMCache documentation, which is available online.

We also post regularly on the LMCache blog.

Examples

Go hands-on with our examples, which demonstrate how to address different use cases with LMCache.

Interested in Connecting?

Fill out the interest form, sign up for our newsletter, join the LMCache Slack, check out the LMCache website, or drop us an email, and our team will reach out to you!

Community meeting

The LMCache community meeting is held bi-weekly on Tuesdays at 9:00 AM PT (Add to Calendar). All are welcome to join!

We keep notes from each meeting in this document, including summaries of standups, discussions, and action items.

Recordings of meetings are available on the YouTube LMCache channel.

Contributing

We welcome and value all contributions and collaborations. Please see the Contributing Guide for how to contribute.

Citation

If you use LMCache for your research, please cite our papers:

@inproceedings{liu2024cachegen,
  title={Cachegen: Kv cache compression and streaming for fast large language model serving},
  author={Liu, Yuhan and Li, Hanchen and Cheng, Yihua and Ray, Siddhant and Huang, Yuyang and Zhang, Qizheng and Du, Kuntai and Yao, Jiayi and Lu, Shan and Ananthanarayanan, Ganesh and others},
  booktitle={Proceedings of the ACM SIGCOMM 2024 Conference},
  pages={38--56},
  year={2024}
}

@article{cheng2024large,
  title={Do Large Language Models Need a Content Delivery Network?},
  author={Cheng, Yihua and Du, Kuntai and Yao, Jiayi and Jiang, Junchen},
  journal={arXiv preprint arXiv:2409.13761},
  year={2024}
}

@inproceedings{10.1145/3689031.3696098,
  author = {Yao, Jiayi and Li, Hanchen and Liu, Yuhan and Ray, Siddhant and Cheng, Yihua and Zhang, Qizheng and Du, Kuntai and Lu, Shan and Jiang, Junchen},
  title = {CacheBlend: Fast Large Language Model Serving for RAG with Cached Knowledge Fusion},
  year = {2025},
  url = {https://doi.org/10.1145/3689031.3696098},
  doi = {10.1145/3689031.3696098},
  booktitle = {Proceedings of the Twentieth European Conference on Computer Systems},
  pages = {94--109},
}

License

The LMCache codebase is licensed under the Apache License 2.0. See the LICENSE file for details.

Download files

File details

Details for the file lmcache-0.3.3.tar.gz.

File metadata

- Download URL: lmcache-0.3.3.tar.gz

- Upload date:

- Size: 937.1 kB

- Tags: Source

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/6.1.0 CPython/3.12.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | f62aaa6f737aa3b7368f863115390080aa68a73dbb54654ac30ae2cf760b8d07 |
| MD5 | bba00bec6a4ae347f4d8c36e7747d1ee |
| BLAKE2b-256 | 3d80c037fb4e64a669ca51e303ad411257de1e3ff4d9df982ae7bbdf6aa6525a |
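The SHA256 digest listed above can be checked against a downloaded copy of the file. The following is a minimal sketch using Python's standard `hashlib`; the helper names `sha256_of_file` and `matches`, and the assumption that the archive sits at a local path you supply, are for illustration only.

```python
import hashlib
import hmac

# SHA256 digest listed above for lmcache-0.3.3.tar.gz
EXPECTED_SHA256 = "f62aaa6f737aa3b7368f863115390080aa68a73dbb54654ac30ae2cf760b8d07"


def sha256_of_file(path, block_size=1 << 20):
    """Compute the SHA256 hex digest of a file, reading it in blocks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def matches(path, expected_hex):
    """Return True if the file's SHA256 digest equals `expected_hex`."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())
```

For example, `matches("lmcache-0.3.3.tar.gz", EXPECTED_SHA256)` should return `True` for an intact download saved under that name in the current directory.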

Provenance

The following attestation bundles were made for lmcache-0.3.3.tar.gz:

Publisher: publish.yml on LMCache/LMCache

- Statement:
  - Statement type: https://in-toto.io/Statement/v1
  - Predicate type: https://docs.pypi.org/attestations/publish/v1
  - Subject name: lmcache-0.3.3.tar.gz
  - Subject digest: f62aaa6f737aa3b7368f863115390080aa68a73dbb54654ac30ae2cf760b8d07
- Sigstore transparency entry: 346646626
- Sigstore integration time:
- Permalink: LMCache/LMCache@92e38375e8a2fc2ee401f2b2c1ed46651245ba3c
- Branch / Tag: refs/tags/v0.3.3
- Owner: https://github.com/LMCache
- Access: public
- Token Issuer: https://token.actions.githubusercontent.com
- Runner Environment: github-hosted
- Publication workflow: publish.yml@92e38375e8a2fc2ee401f2b2c1ed46651245ba3c
- Trigger Event: release

File details

Details for the file lmcache-0.3.3-cp312-cp312-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl.

File metadata

- Download URL: lmcache-0.3.3-cp312-cp312-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl

- Upload date:

- Size: 3.6 MB

- Tags: CPython 3.12, manylinux: glibc 2.24+ x86-64, manylinux: glibc 2.28+ x86-64

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/6.1.0 CPython/3.12.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 9542c92183255cf7772b01049ae1999c51efe579e455e89b1338ee6c26bb52a0 |
| MD5 | ea34e9827f83f1e6926e5e5f3de6b0eb |
| BLAKE2b-256 | de5ef849513d4c976ba32f4a9304c1bb429c417fe1d3e2ae5fee0d985481fa03 |

Provenance

The following attestation bundles were made for lmcache-0.3.3-cp312-cp312-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl:

Publisher: publish.yml on LMCache/LMCache

- Statement:
  - Statement type: https://in-toto.io/Statement/v1
  - Predicate type: https://docs.pypi.org/attestations/publish/v1
  - Subject name: lmcache-0.3.3-cp312-cp312-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl
  - Subject digest: 9542c92183255cf7772b01049ae1999c51efe579e455e89b1338ee6c26bb52a0
- Sigstore transparency entry: 346646628
- Sigstore integration time:
- Permalink: LMCache/LMCache@92e38375e8a2fc2ee401f2b2c1ed46651245ba3c
- Branch / Tag: refs/tags/v0.3.3
- Owner: https://github.com/LMCache
- Access: public
- Token Issuer: https://token.actions.githubusercontent.com
- Runner Environment: github-hosted
- Publication workflow: publish.yml@92e38375e8a2fc2ee401f2b2c1ed46651245ba3c
- Trigger Event: release

File details

Details for the file lmcache-0.3.3-cp311-cp311-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl.

File metadata

- Download URL: lmcache-0.3.3-cp311-cp311-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl

- Upload date:

- Size: 3.6 MB

- Tags: CPython 3.11, manylinux: glibc 2.24+ x86-64, manylinux: glibc 2.28+ x86-64

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/6.1.0 CPython/3.12.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | a513fc5521717ffacbbaa0fa2255ca604cbabcec9357631a04bb950b8e4fca25 |
| MD5 | b68fa891d61759dce75eb52a868b364b |
| BLAKE2b-256 | fc69587ba005799a81dfa5fe5618cc1d33ae156db366e53c8d0d923d31e20fbe |

Provenance

The following attestation bundles were made for lmcache-0.3.3-cp311-cp311-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl:

Publisher: publish.yml on LMCache/LMCache

- Statement:
  - Statement type: https://in-toto.io/Statement/v1
  - Predicate type: https://docs.pypi.org/attestations/publish/v1
  - Subject name: lmcache-0.3.3-cp311-cp311-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl
  - Subject digest: a513fc5521717ffacbbaa0fa2255ca604cbabcec9357631a04bb950b8e4fca25
- Sigstore transparency entry: 346646629
- Sigstore integration time:
- Permalink: LMCache/LMCache@92e38375e8a2fc2ee401f2b2c1ed46651245ba3c
- Branch / Tag: refs/tags/v0.3.3
- Owner: https://github.com/LMCache
- Access: public
- Token Issuer: https://token.actions.githubusercontent.com
- Runner Environment: github-hosted
- Publication workflow: publish.yml@92e38375e8a2fc2ee401f2b2c1ed46651245ba3c
- Trigger Event: release

File details

Details for the file lmcache-0.3.3-cp310-cp310-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl.

File metadata

- Download URL: lmcache-0.3.3-cp310-cp310-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl

- Upload date:

- Size: 3.6 MB

- Tags: CPython 3.10, manylinux: glibc 2.24+ x86-64, manylinux: glibc 2.28+ x86-64

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/6.1.0 CPython/3.12.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 97bebf9cdbec0b959deb3f3961616fa70ba11d4e66b3cc4a6bec0911ebce3898 |
| MD5 | 85c65b3b293a8781c303b2711f827080 |
| BLAKE2b-256 | e47749b8c880be5a0e7766d0a0c141c9a4a3355d8927ef9289038fcdb134f3ab |

Provenance

The following attestation bundles were made for lmcache-0.3.3-cp310-cp310-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl:

Publisher: publish.yml on LMCache/LMCache

- Statement:
  - Statement type: https://in-toto.io/Statement/v1
  - Predicate type: https://docs.pypi.org/attestations/publish/v1
  - Subject name: lmcache-0.3.3-cp310-cp310-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl
  - Subject digest: 97bebf9cdbec0b959deb3f3961616fa70ba11d4e66b3cc4a6bec0911ebce3898
- Sigstore transparency entry: 346646631
- Sigstore integration time:
- Permalink: LMCache/LMCache@92e38375e8a2fc2ee401f2b2c1ed46651245ba3c
- Branch / Tag: refs/tags/v0.3.3
- Owner: https://github.com/LMCache
- Access: public
- Token Issuer: https://token.actions.githubusercontent.com
- Runner Environment: github-hosted
- Publication workflow: publish.yml@92e38375e8a2fc2ee401f2b2c1ed46651245ba3c
- Trigger Event: release